QuerySet Extensions¶

MySQL-specific Model and QuerySet extensions. To add these to your

Model/Manager/QuerySet trifecta, see Installation. Methods

below are all QuerySet methods; where standalone forms are referred to,

they can be imported from django_mysql.models.

Approximate Counting¶

- django_mysql.models.approx_count(fall_back=True, return_approx_int=True, min_size=1000)¶

By default a QuerySet’s count() method runs SELECT COUNT(*) on a table. Whilst this is fast for

MyISAMtables, forInnoDBit involves a full table scan to produce a consistent number, due to MVCC keeping several copies of rows when under transaction. If you have lots of rows, you will notice this as a slow query - Percona have some more details.This method returns the approximate count found by running

EXPLAIN SELECT COUNT(*) .... It can be out by 30-50% in the worst case, but in many applications it is closer, and is good enough, such as when presenting many pages of results but users will only practically scroll through the first few. For example:>>> Author.objects.count() # slow 509741 >>> Author.objects.approx_count() # fast, with some error 531140

Three arguments are accepted:

- fall_back=True

If

Trueand the approximate count cannot be calculated,count()will be called and returned instead, otherwiseValueErrorwill be raised.The approximation can only be found for

objects.all(), with no filters,distinct()calls, etc., so it’s reasonable to fall back.

- return_approx_int=True

When

True, anintis not returned (excpet when falling back), but instead a subclass calledApproximateInt. This is for all intents and purposes anint, apart from when cast tostr, it renders as e.g. ‘Approximately 12345’ (internationalization ready). Useful for templates you can’t edit (e.g. the admin) and you want to communicate that the number is not 100% accurate. For example:>>> print(Author.objects.approx_count()) # ApproximateInt Approximately 531140 >>> print(Author.objects.approx_count() + 0) # plain int 531140 >>> print(Author.objects.approx_count(return_approx_int=False)) # plain int 531140

- min_size=1000

The threshold at which to use the approximate algorithm; if the approximate count comes back as less that this number,

count()will be called and returned instead, since it should be so small as to not bother your database. Set to0to disable this behaviour and always return the approximation.The default of

1000is a bit pessimistic - most tables won’t take long when callingCOUNT(*)on tens of thousands of rows, but it could be slow for very wide tables.

- django_mysql.models.count_tries_approx(activate=True, fall_back=True, return_approx_int=True, min_size=1000)¶

This is the ‘magic’ method to make pre-existing code, such as Django’s admin, work with

approx_count. Callingcount_tries_approxsets the QuerySet up such that then callingcountwill callapprox_countinstead, with the given arguments.To unset this, call

count_tries_approxwithactivate=False.To ‘fix’ an Admin class with this, simply do the following (assuming

Authorinherits fromdjango_mysql’sModel):class AuthorAdmin(ModelAdmin): def get_queryset(self, request): qs = super(AuthorAdmin, self).get_queryset(request) return qs.count_tries_approx()

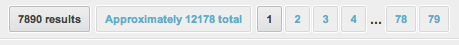

You’ll be able to see this is working on the pagination due to the word ‘Approximately’ appearing:

You can do this at a base class for all your

ModelAdminsubclasses to apply the magical speed increase across your admin interface.

Query Hints¶

The following methods add extra features to the ORM which allow you to access

some MySQL-specific syntax. They do this by inserting special comments which

pass through Django’s ORM layer and get re-written by a function that wraps the

lower-level cursor.execute().

Because not every user wants these features and there is a (small) overhead to every query, you must activate this feature by adding to your settings:

DJANGO_MYSQL_REWRITE_QUERIES = True

Once you’ve done this, the following methods will work.

- django_mysql.models.label(comment)¶

Allows you to add an arbitrary comment to the start of the query, as the second thing after the keyword. This can be used to ‘tag’ queries so that when they show in the slow_log or another monitoring tool, you can easily back track to the python code generating the query. For example, imagine constructing a QuerySet like this:

qs = Author.objects.label("AuthorListView").all()

When executed, this will have SQL starting:

SELECT /*AuthorListView*/ ...

You can add arbitrary labels, and as many of them as you wish - they will appear in the order added. They will work in

SELECTandUPDATEstatements, but not inDELETEstatements due to limitations in the way Django performs deletes.You should not pass user-supplied data in for the comment. As a basic protection against accidental SQL injection, passing a comment featuring

*/will raise aValueError, since that would prematurely end the comment. However due to executable comments, the comment is still prone to some forms of injection.However this is a feature - by not including spaces around your string, you may use this injection to use executable comments to add hints that are otherwise not supported, or to use MySQL 5.7+ optimizer hints.

- django_mysql.models.straight_join()¶

Adds the

STRAIGHT_JOINhint, which forces the join order during aSELECT. Note that you can’t force Django’s join order, but it tends to be in the order that the tables get mentioned in the query.Example usage:

# Note from Adam: sometimes the optimizer joined books -> author, which # is slow. Force it to do author -> books. Author.objects.distinct().straight_join().filter(books__age=12)[:10]

The MariaDB docs also have a good page Index Hints: How to Force Query Plans” which covers some cases when you might want to use

STRAIGHT_JOIN.

- django_mysql.models.sql_small_result()¶

Adds the

SQL_SMALL_RESULThint, which avoids using a temporary table in the case of aGROUP BYorDISTINCT.Example usage:

# Note from Adam: we have very few distinct birthdays, so using a # temporary table is slower Author.objects.values("birthday").distinct().sql_small_result()

- django_mysql.models.sql_big_result()¶

Adds the

SQL_BIG_RESULThint, which forces using a temporary table in the case of aGROUP BYorDISTINCT.Example usage:

# Note from Adam: for some reason the optimizer didn’t use a temporary # table for this, so we force it Author.objects.distinct().sql_big_result()

- django_mysql.models.sql_buffer_result()¶

Adds the

SQL_BUFFER_RESULThint, which forces the optimizer to use a temporary table to process the result. This is useful to free locks as soon as possible.Example usage:

# Note from Adam: seeing a lot of throughput on this table. Buffering # the results makes the queries less contentious. HighThroughputModel.objects.filter(x=y).sql_buffer_result()

- django_mysql.models.sql_cache()¶

Adds the

SQL_CACHEhint, which means the result set will be stored in the Query Cache. This only has an effect when the MySQL system variablequery_cache_typeis set to2orDEMAND.Warning

The query cache was removed in MySQL 8.0, and is disabled by default from MariaDB 10.1.7.

Example usage:

# Fetch recent posts, cached in MySQL for speed recent_posts = BlogPost.objects.sql_cache().order_by("-created")[:5]

Docs: MariaDB.

- django_mysql.models.sql_no_cache()¶

Adds the

SQL_NO_CACHEhint, which means the result set will not be fetched from or stored in the Query Cache. This only has an effect when the MySQL system variablequery_cache_typeis set to1orON.Warning

The query cache was removed in MySQL 8.0, and is disabled by default from MariaDB 10.1.7.

Example usage:

# Avoid caching all the expired sessions, since we’re about to delete # them deletable_session_ids = ( Session.objects.sql_no_cache().filter(expiry__lt=now()).values_list("id", flat=True) )

Docs: MariaDB.

- django_mysql.models.sql_calc_found_rows()¶

Adds the

SQL_CALC_FOUND_ROWShint, which means the total count of matching rows will be calculated when you only take a slice. You can access this count with thefound_rowsattribute of theQuerySetafter filling its result cache, by e.g. iterating it.This can be faster than taking the slice and then again calling

.count()to get the total count.Warning

This is deprecated in MySQL 8.0.17+.

Example usage:

>>> can_drive = Customer.objects.filter(age=21).sql_calc_found_rows()[:10] >>> len(can_drive) # Fetches the first 10 from the database 10 >>> can_drive.found_rows # The total number of 21 year old customers 1942

- django_mysql.models.use_index(*index_names, for_=None, table_name=None)¶

Adds a

USE INDEXhint, which affects the index choice made by MySQL’s query optimizer for resolving the query.Note that index names on your tables will normally have been generated by Django and contain a hash fragment. You will have to check your database schema to determine the index name.

If you pass any non-existent index names, MySQL will raise an error. This means index hints are especially important to test in the face of future schema changes.

for_restricts the scope that the index hint applies to. By default it applies to all potential index uses during the query; you may supply one of'JOIN','ORDER BY', or'GROUP BY'to restrict the index hint to only be used by MySQL for index selection in their respective stages of query execution. For more information see the MySQL/MariaDB docs (link below).table_nameis the name of the table that the hints are for. By default, this will be the name of the table of the model that theQuerySetis for, however you can supply any other table that may be joined into the query (from e.g.select_related()). Be careful - there is no validation on the table name, and if it does not exist in the final query it will be ignored. Also it is injected raw into the resultant SQL, so you should not use user data otherwise it may open the potential for SQL injection.Note that

USE INDEXaccepts no index names to mean ‘use no indexes’, i.e. table scans only.Example usage:

# SELECT ... FROM `author` USE INDEX (`name_12345`) WHERE ... >>> Author.objects.use_index("name_12345").filter(name="John") # SELECT ... FROM `author` USE INDEX (`name_12345`, `name_age_678`) WHERE ... >>> Author.objects.use_index("name_12345", "name_age_678").filter(name="John") # SELECT ... FROM `author` USE INDEX FOR ORDER BY (`name_12345`) ... ORDER BY `name` >>> Author.objects.use_index("name_12345", for_="ORDER BY").order_by("name") # SELECT ... FROM `book` INNER JOIN `author` USE INDEX (`authbook`) ... >>> Book.objects.select_related("author").use_index("authbook", table_name="author")

- django_mysql.models.force_index(*index_names, for_=None)¶

Similar to the above

use_index(), but adds aFORCE INDEXhint. Note that unlikeuse_index()you must supply at least one index name. For more information, see the MySQL/MariaDB docs.

- django_mysql.models.ignore_index(*index_names, for_=None)¶

Similar to the above

use_index(), but adds anIGNORE INDEXhint. Note that unlikeuse_index()you must supply at least one index name. For more information, see the MySQL/MariaDB docs.

‘Smart’ Iteration¶

Here’s a situation we’ve all been in - we screwed up, and now we need to fix the data. Let’s say we accidentally set the address of all authors without an address to “Nowhere”, rather than the blank string. How can we fix them??

The simplest way would be to run the following:

Author.objects.filter(address="Nowhere").update(address="")

Unfortunately with a lot of rows (‘a lot’ being dependent on your database server and level of traffic) this will stall other access to the table, since it will require MySQL to read all the rows and to hold write locks on them in a single query.

To solve this, we could try updating a chunk of authors at a time; such code tends to get ugly/complicated pretty quickly:

min_id = 0

max_id = 1000

biggest_author_id = Author.objects.order_by("-id")[0].id

while True:

Author.objects.filter(id__gte=min_id, id__lte=...)

# I'm not even going to type this all out, it's so much code

Here’s the solution to this boilerplate with added safety features - ‘smart’

iteration! There are two classes; one yields chunks of the given QuerySet,

and the other yields the objects inside those chunks. Nearly every data update

can be thought of in one of these two methods.

- class django_mysql.models.SmartChunkedIterator(queryset, atomically=True, status_thresholds=None, pk_range=None, chunk_time=0.5, chunk_size=2, chunk_min=1, chunk_max=10000, report_progress=False, total=None)¶

Implements a smart iteration strategy over the given

queryset. There is a methoditer_smart_chunksthat takes the same arguments on theQuerySetMixinso you can just:bad_authors = Author.objects.filter(address="Nowhere") for author_chunk in bad_authors.iter_smart_chunks(): author_chunk.update(address="")

Iteration proceeds by yielding primary-key based slices of the queryset, and dynamically adjusting the size of the chunk to try and take

chunk_timeseconds. In between chunks, thewait_until_load_low()method ofGlobalStatusis called to ensure the database is not under high load.Warning

Because of the slicing by primary key, there are restrictions on what

QuerySets you can use, and aValueErrorwill be raised if the queryset doesn’t meet that. Specifically, onlyQuerySets on models with integer-based primary keys, which are unsliced, and have noorder_bywill work.There are a lot of arguments and the defaults have been picked hopefully sensibly, but please check for your case though!

- queryset¶

The queryset to iterate over; if you’re calling via

.iter_smart_chunksthen you don’t need to set this since it’s the queryset you called it on.

- atomically=True

If true, wraps each chunk in a transaction via django’s

transaction.atomic(). Recommended for any write processing.

- status_thresholds=None

A dict of status variables and their maximum tolerated values to be checked against after each chunk with

wait_until_load_low().When set to

None, the default,GlobalStatuswill use its default of{"Threads_running": 10}. Set to an empty dict to disable status checking - but this is not really recommended, as it can save you from locking up your site with an overly aggressive migration.Using

Threads_runningis the most recommended variable to check against, and is copeid from the default behaviour ofpt-online-schema-change. The default value of 10 threads is deliberately conservative to avoid locking small database servers. You should tweak it up based upon the live activity of your server - check the running thread count during normal traffic and add some overhead.

- pk_range=None

Controls the primary key range to iterate over with slices. By default, with

pk_range=None, the QuerySet will be searched for its minimum and maximumpkvalues before starting. On QuerySets that match few rows, or whose rows aren’t evenly distributed, this can still execute a long blocking table scan to find these two rows. You can remedy this by giving a value forpk_range:If set to

'all', the range will be the minimum and maximum PK values of the entire table, excluding any filters you have set up - that is, forModel.objects.all()for the givenQuerySet’s model.If set to a 2-tuple, it will be unpacked and used as the minimum and maximum values respectively.

Note

The iterator determines the minimum and maximum at the start of iteration and does not update them whilst iterating, which is normally a safe assumption, since if you’re “fixing things” you probably aren’t creating any more bad data. If you do need to process every row then set

pk_rangeto have a maximum far greater than what you expect would be reached by inserts that occur during iteration.

- chunk_time=0.5

The time in seconds to aim for each chunk to take. The chunk size is dynamically adjusted to try and match this time, via a weighted average of the past and current speed of processing. The default and algorithm is taken from the analogous

pt-online-schema-changeflag –chunk-time.

- chunk_size=2

The initial size of the chunk that will be used. As this will be dynamically scaled and can grow fairly quickly, the initial size of 2 should be appropriate for most use cases.

- chunk_min=1

The minimum number of objects in a chunk. You do not normally need to tweak this since the dynamic scaling works very well, however it might be useful if your data has a lot of “holes” or if there are other constraints on your application.

- chunk_max=10000

The maximum number of objects in a chunk, a kind of sanity bound. Acts to prevent harm in the case of iterating over a model with a large ‘hole’ in its primary key values, e.g. if only ids 1-10k and 100k-110k exist, then the chunk ‘slices’ could grow very large in between 10k and 100k since you’d be “processing” the non-existent objects 10k-100k very quickly.

- report_progress=False

If set to true, display out a running counter and summary on

sys.stdout. Useful for interactive use. The message looks like this:AuthorSmartChunkedIterator processed 0/100000 objects (0.00%) in 0 chunksAnd uses

\rto erase itself when re-printing to avoid spamming your screen. At the endFinished!is printed on a new line.

- total=None

By default the total number of objects to process will be calculated with

approx_count(), withfall_backset toTrue. Thiscount()query could potentially be big and slow.totalallows you to pass in the total number of objects for processing, if you can calculate in a cheaper way, for example if you have a read-replica to use.

- class django_mysql.models.SmartIterator¶

A convenience subclass of

SmartChunkedIteratorthat simply unpacks the chunks for you. Can be accessed via theiter_smartmethod ofQuerySetMixin.For example, rather than doing this:

bad_authors = Author.objects.filter(address="Nowhere") for authors_chunk in bad_authors.iter_smart_chunks(): for author in authors_chunk: author.send_apology_email()

You can do this:

bad_authors = Author.objects.filter(address="Nowhere") for author in bad_authors.iter_smart(): author.send_apology_email()

All the same arguments as

SmartChunkedIteratorare accepted.

- class django_mysql.models.SmartPKRangeIterator¶

A subclass of

SmartChunkedIteratorthat doesn’t return the chunk’sQuerySetbut instead returns the start and end primary keys for the chunk. This may be useful when you want to iterate but the slices need to be used in a raw SQL query. Can be accessed via theiter_smart_pk_rangesmethod ofQuerySetMixin.For example, rather than doing this:

for authors_chunk in Author.objects.iter_smart_chunks(): limits = author_chunk.aggregate(min_pk=Min("pk"), max_pk=Max("pk")) authors = Author.objects.raw( """ SELECT name from app_author WHERE id >= %s AND id <= %s """, (limits["min_pk"], limits["max_pk"]), ) # etc...

…you can do this:

for start_pk, end_pk in Author.objects.iter_smart_pk_ranges(): authors = Author.objects.raw( """ SELECT name from app_author WHERE id >= %s AND id < %s """, (start_pk, end_pk), ) # etc...

In the first format we were forced to perform a dumb query to determine the primary key limits set by

SmartChunkedIterator, due to theQuerySetnot otherwise exposing this information.Note

There is a subtle difference between the two versions. In the first the end boundary,

max_pk, is a closed bound, whereas in the second, theend_pkfromiter_smart_pk_rangesis an open bound. Thus the<=changes to a<.All the same arguments as

SmartChunkedIteratorare accepted.

Integration with pt-visual-explain¶

How does MySQL really execute a query? The EXPLAIN statement

(docs: MySQL /

MariaDB),

gives a description of the execution plan, and the pt-visual-explain

tool

can format this in an understandable tree.

This function is a shortcut to turn a QuerySet into its visual explanation,

making it easy to gain a better understanding of what your queries really end

up doing.

- django_mysql.models.pt_visual_explain(display=True)¶

Call on a

QuerySetto print its visual explanation, or withdisplay=Falseto return it as a string. It prepends the SQL of the query with ‘EXPLAIN’ and passes it through themysqlandpt-visual-explaincommands to get the output. You therefore need the MySQL client and Percona Toolkit installed where you run this.Example:

>>> Author.objects.all().pt_visual_explain() Table scan rows 1020 +- Table table myapp_author

Can also be imported as a standalone function if you want to use it on a

QuerySetthat does not have theQuerySetMixinadded, e.g. for built-in Django models:>>> from django_mysql.models import pt_visual_explain >>> pt_visual_explain(User.objects.all()) Table scan rows 1 +- Table table auth_user